Polyphony Digital recently revealed Gran Turismo Sophy, a new artificial intelligence driving system that will appear in Gran Turismo 7. The technology was developed in collaboration with the 25-person team at Sony AI, using the latest advances in machine learning. The team’s research was published in Nature, and GT Sophy was tested against (and defeated!) some of the world’s best Gran Turismo drivers in a live event in Tokyo last year.

However, the GT Sophy reveal raised nearly as many questions as it answered. How exactly does the technology work? How will it actually be integrated into GT7? And what kind of limitations does it have?

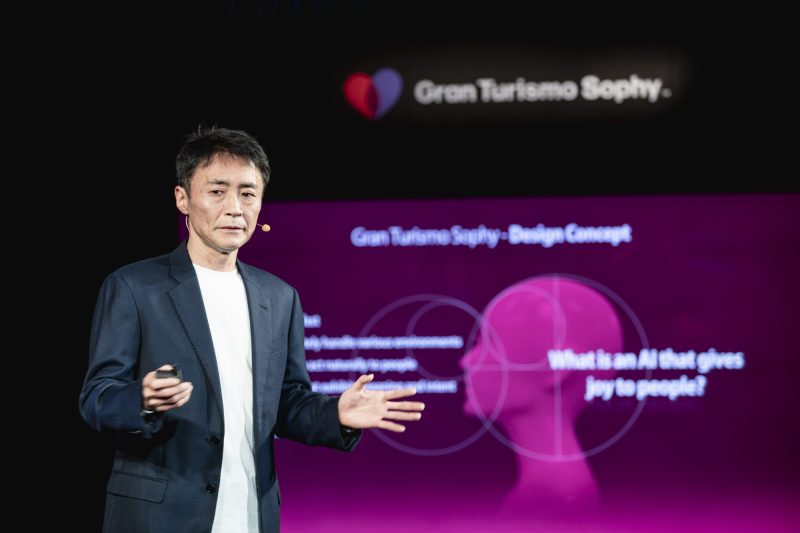

To help answer all of these questions, we studied the Nature publication and spoke with Gran Turismo series creator Kazunori Yamauchi and Sony AI America Director Peter Wurman in an exclusive interview. This is what we learned.

How does Sophy actually work?

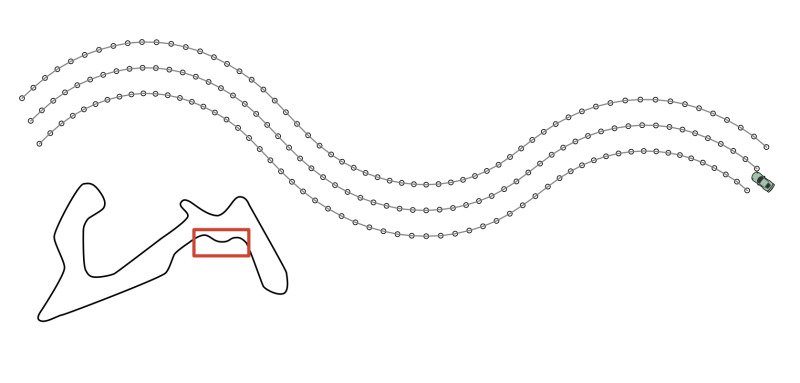

As a “player”, Sophy sees the virtual Gran Turismo environment as a static map, with the left, right, and center lines of the track defined as 3D points. The track ahead of Sophy is divided into 60 equally spaced segments, with the length of each segment calculated dynamically from the vehicle’s speed. Together, the segments represent approximately 6 seconds of travel at any one time.
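The look-ahead arithmetic described here can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are hypothetical, speed is taken in metres per second, and the 60 segments are assumed to split a 6-second horizon evenly. It is not Sony AI's actual code.

```python
# Illustrative sketch only: names and units are assumptions, not Sony AI's code.
HORIZON_S = 6.0    # seconds of upcoming track, per the article
N_SEGMENTS = 60    # number of equally spaced segments, per the article


def segment_length(speed_mps: float) -> float:
    """Length of one look-ahead segment (metres) at the given speed."""
    return speed_mps * HORIZON_S / N_SEGMENTS


def lookahead_distances(speed_mps: float) -> list[float]:
    """Distance ahead of the car (metres) at which each segment ends."""
    step = segment_length(speed_mps)
    return [step * (i + 1) for i in range(N_SEGMENTS)]


# At 50 m/s (180 km/h) each segment is 5 m long and the horizon is 300 m.
distances = lookahead_distances(50.0)
print(len(distances), distances[0], distances[-1])  # 60 5.0 300.0
```

The faster the car travels, the further ahead the same 60 segments reach, so the agent always "sees" the same amount of time rather than the same amount of road.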

Sophy also has access to certain information about what the car is doing in its environment, including 3D velocity, angular velocity, acceleration, the load on each tire, and tire slip angles. It is also aware of the vehicle’s progress along the track, the slope of the track surface, and the vehicle’s orientation relative to the center line of the track and the upcoming edges. Sophy is notified by the game if the vehicle makes contact with, or travels outside, the game’s default track boundaries.

In terms of controls, Sophy only has access to acceleration, braking, and left/right steering inputs. It can only modulate these inputs at a rate of 10 Hz, or once every 100 milliseconds. It does not have access to gear shifting, traction control, brake balance, or any other parameters normally available to human players.
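To make the control constraints concrete, here is a minimal sketch of an action type limited to the three inputs and a helper that maps simulation time to a 10 Hz control tick. The class and function names and the numeric ranges (0 to 1 for pedals, -1 to 1 for steering) are assumptions made for illustration; the article does not describe the real interface.

```python
# Illustrative sketch only: the action ranges and names are assumptions.
from dataclasses import dataclass

CONTROL_HZ = 10
CONTROL_PERIOD_MS = 1000 // CONTROL_HZ  # 100 ms between control updates


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


@dataclass(frozen=True)
class Action:
    throttle: float  # assumed range 0.0 .. 1.0
    brake: float     # assumed range 0.0 .. 1.0
    steering: float  # assumed range -1.0 (full left) .. 1.0 (full right)


def make_action(throttle: float, brake: float, steering: float) -> Action:
    """Build an action, clamping each input to its assumed valid range."""
    return Action(
        clamp(throttle, 0.0, 1.0),
        clamp(brake, 0.0, 1.0),
        clamp(steering, -1.0, 1.0),
    )


def control_tick(time_ms: int) -> int:
    """Index of the control update that is in effect at the given time."""
    return time_ms // CONTROL_PERIOD_MS


print(make_action(1.4, 0.0, -2.0))            # values are clamped into range
print(control_tick(0), control_tick(99), control_tick(100))  # 0 0 1
```

Between two ticks the last action simply stays in effect, which is why an input chosen at 0 ms still applies at 99 ms.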

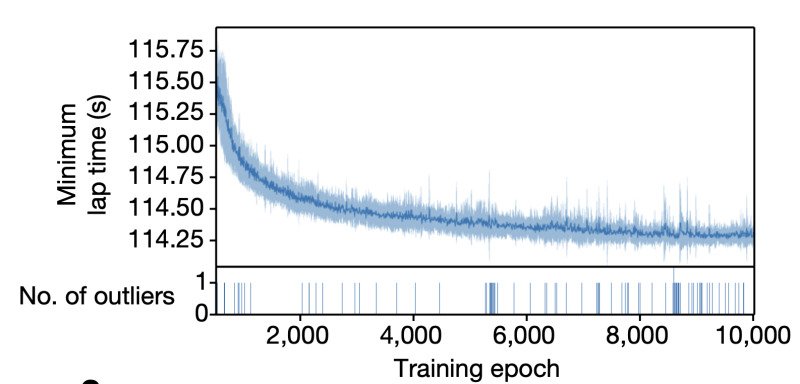

These environmental variables and limited inputs are presented to Sophy, and it then gets to work. Using advanced machine learning algorithms, it drives the track over and over again. It is “rewarded”, so to speak, for getting around the track in the least amount of time, and “punished”, again so to speak, if it makes contact with walls or other cars or goes out of bounds.

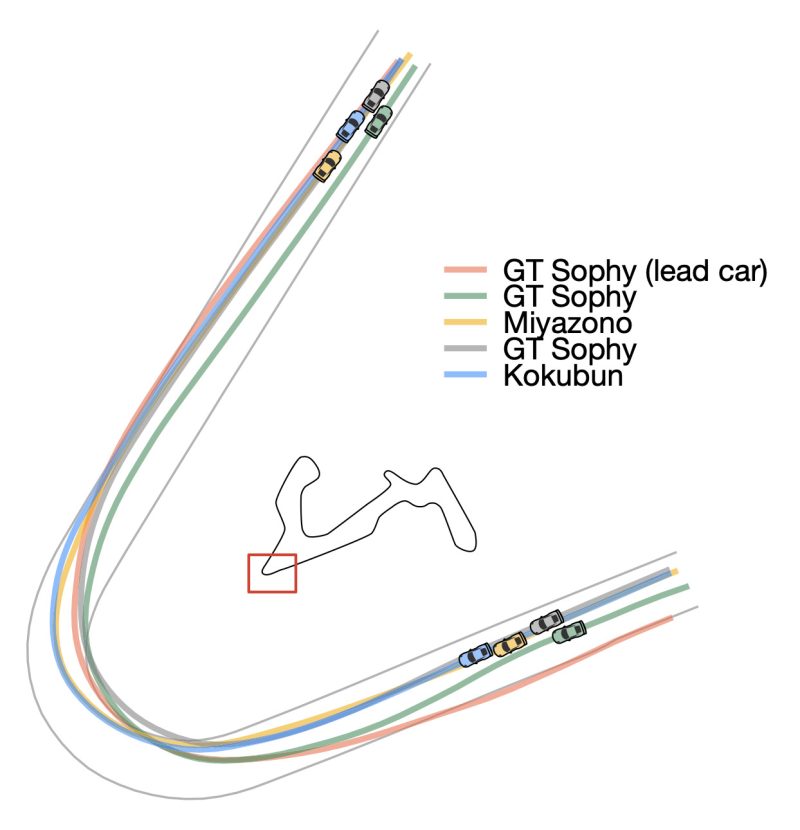

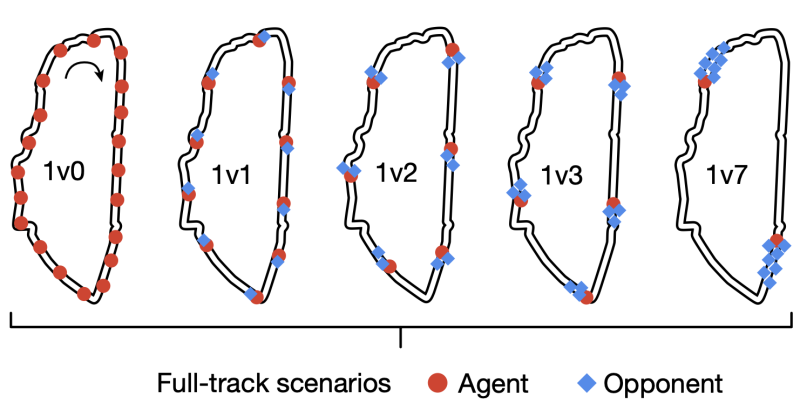

“GT Sophy was trained using reinforcement learning,” explained Sony AI America Director Peter Wurman. “Essentially, we gave it rewards for making progress along the track or passing another car, and penalties when it left the track or crashed into other cars. To make sure it learned how to act in competitive racing scenarios, we put the agent in a lot of different racing situations with several different types of opponents. With enough practice, through trial and error, it was able to learn how to handle other cars. There was a very fine line between being aggressive enough to hold its driving line and being too aggressive, causing accidents and getting penalties.”
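The reward scheme Wurman describes can be pictured as a per-step score that adds up progress and passing bonuses and subtracts penalties. The sketch below is a simplified illustration: the `StepInfo` fields and every weight are hypothetical values chosen for the example, not the ones used to train GT Sophy.

```python
# Illustrative sketch only: fields and weights are hypothetical.
from dataclasses import dataclass


@dataclass
class StepInfo:
    progress_m: float   # distance gained along the track this step
    cars_passed: int    # number of opponents overtaken this step
    off_track: bool     # car left the track boundaries
    hit_wall: bool      # car made contact with a wall
    hit_car: bool       # car made contact with another car


# Hypothetical weights; balancing these is the hard part described above.
REWARD_PER_METER = 1.0
REWARD_PER_PASS = 5.0
PENALTY_OFF_TRACK = 2.0
PENALTY_WALL = 3.0
PENALTY_CAR = 10.0


def step_reward(info: StepInfo) -> float:
    """Reward for one control step: progress and passes minus penalties."""
    reward = REWARD_PER_METER * info.progress_m
    reward += REWARD_PER_PASS * info.cars_passed
    if info.off_track:
        reward -= PENALTY_OFF_TRACK
    if info.hit_wall:
        reward -= PENALTY_WALL
    if info.hit_car:
        reward -= PENALTY_CAR
    return reward


clean_step = StepInfo(5.0, 0, False, False, False)
dirty_pass = StepInfo(5.0, 1, False, False, True)
print(step_reward(clean_step))  # 5.0
print(step_reward(dirty_pass))  # 0.0
```

With these example weights, a pass achieved by hitting the other car scores worse than simply driving cleanly, which is one way to picture the "fine line" between assertive and overly aggressive driving.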

Wurman went on to describe the toughest challenges in actually processing the data. “The hard part was figuring out how to present this information to the neural networks in the most effective way. For example, through trial and error, we found that encoding approximately 6 seconds of the upcoming track was enough information for GT Sophy to make decisions about its driving lines,” he explained. “Another big challenge was balancing the reward and penalty signals to produce an agent that was both aggressive and a good sport.”

Sophy does all this in real time, on real PlayStation 4s running a special build of Gran Turismo Sport that reports the required positional data and accepts control inputs over a network connection. Sophy’s code is executed by servers that communicate with the PlayStations over the network. To help speed up the process, Sophy controls 20 cars on the track at the same time. The results are fed into servers equipped with NVIDIA V100 or A100 chips, server-grade GPUs designed to process AI and machine learning data.

It’s important to note that this kind of computing power is only needed to “create” Sophy, not to run it. The machine learning process eventually produces “models” that can then be executed on more modest hardware.

“Sophy’s learning is processed in parallel using computing resources in the cloud, but if you are just executing an already trained network, then a local PS5 is more than enough,” Kazunori Yamauchi explained. “This asymmetry of computing power is a general feature of neural networks.”

How is Sophy different?

AI in racing games has always been a kind of “black box”. Game developers rarely discuss how it actually works, but it’s an important part of racing games that all players interact with. We’ve been curious to learn more about how Gran Turismo’s AI has worked in the past and what makes Sophy so different.

As Kazunori Yamauchi explained to us, the machine learning process provides Sophy with more rules of behavior than human programmers could ever devise, but this approach comes with drawbacks as well.

Yamauchi-san explains: “AI so far has been rule-based, so it basically works as an ‘if-then’ program. But no matter how many such rules are added, it can’t handle conditions and environments other than those specified. Sophy, on the other hand, generates a huge number of implicit rules, more than humans could manage, within its network layers. As a result, it is able to adapt to different conditions and environments. But since these rules are implicit, it is not possible to make it learn a ‘specific behavior’ that would be simple for a rule-based AI.”
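The limitation of “if-then” AI that Yamauchi describes is easy to see in a toy example. The rules, surface names, and speeds below are invented purely for illustration and have nothing to do with Gran Turismo's real AI code; the point is only that a condition nobody wrote a rule for gets no answer.

```python
# Toy illustration only: rules, surfaces, and speeds are invented.
from typing import Optional


def rule_based_target_speed(surface: str, corner_radius_m: float) -> Optional[float]:
    """Hand-written 'if-then' rules. Returns a target speed in km/h,
    or None when no rule covers the situation."""
    if surface == "dry":
        if corner_radius_m < 50:
            return 80.0
        return 200.0
    if surface == "wet":
        if corner_radius_m < 50:
            return 60.0
        return 150.0
    # Any condition the programmer did not anticipate falls through here.
    return None


print(rule_based_target_speed("dry", 30.0))   # 80.0
print(rule_based_target_speed("snow", 30.0))  # None
```

A learned network has no such explicit table, which is both why it can generalize to situations nobody listed and why a single specific behavior cannot simply be typed in as one more rule.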

How will Sophy appear in Gran Turismo 7?

Although Sophy has been developed over the past few years using Gran Turismo Sport, the technology will first appear publicly in Gran Turismo 7, in a future update to the game. Kazunori Yamauchi’s announcement was light on details, so it was something we were excited to ask him about.

“It is possible that Sophy will appear in front of the player in three forms,” Yamauchi-san explained. “As a teacher who teaches driving to players, as a student who learns sportsmanship from players, and as a friend to race with. I would not rule out the possibility of a B-Spec mode, where the player is the race director and Sophy is the driver.”

Sophy can also be used as a tool in the game itself, for example for Balance of Performance (BoP) settings. “In principle, it is possible to use Sophy for BoP settings,” Yamauchi added. “If it was just about aligning the lap times of the different cars, it could be done now. But since BoP settings aren’t just about lap times, we won’t leave it all to Sophy, but it will definitely help in creating BoP.”

Sophy is still learning

Once Sophy was revealed, we were curious to learn more about its limitations. The Sony AI team is fully aware of how Sophy can improve, and the technology itself is still under active development.

For example, in the current iteration, Sophy is trained on specific tracks in specific conditions, but the team expects the technology to be able to adapt. “These versions of GT Sophy were trained on specific car-track combinations,” Wurman explained. “Enhancing the agent’s driving performance to cope with modifications to the car’s performance is part of our future work. This version of GT Sophy has also not been trained on environmental variations, but we expect the techniques to continue to work under these conditions.”

As Sophy debuted as a superhuman driver capable of defeating the world’s best Gran Turismo players, questions and concerns immediately emerged about its ability to adapt to less competitive human drivers.

According to Peter Wurman, Sophy should be able to adapt by genuinely driving like a less experienced driver rather than just being artificially slowed down. “This is also part of our future work,” explained the director of Sony AI America. “Our goal is to create an agent that, when in a ‘slower’ mode, drives like a less experienced driver, rather than being handicapped in some way, such as arbitrarily accelerating or slowing down in violation of physics.”

Sony AI’s initial goal was to develop a faster, more competitive AI, which the team could then build on to develop a general-purpose tool that makes the game more enjoyable for everyone. “Our goal with this project was to show that we can create an agent that can race with the best players in the world. Our ultimate goal is to create an agent that can give players of all types an exciting racing experience,” Wurman emphasized.

More details

The research and development that goes into today’s video games, especially the Gran Turismo games, is usually protected as a trade secret. This makes the transparency of Sophy’s development all the more refreshing, and incredibly cool for those who are interested.

If you’d like to dig deeper and learn more about Sophy’s inner workings, you can read the full peer-reviewed paper in the February 10, 2022 issue of the scientific journal Nature. The article and abstract are available for download with a subscription. For free access to Nature, check with your local library or university.

We’re sure to learn more about Sophy after GT7 is released on March 4, 2022. As usual, we’ll keep a close eye on any news as soon as it’s revealed. Stay tuned!
